🔥 What's New?

👉 An important change in GTM: automatic loading of Google tags as of April 10

What's new?

A significant change is coming to the way GTM works with Google tags.

From April 10, 2025, GTM containers will automatically load a full Google tag before transmitting Google Ads and Floodlight events.

In fact, GTM has been doing this for a long time, with one major difference: today, the auto-loaded Google tag has several features disabled (no automatic page_view, enhanced measurement turned off). From April 10, the auto-loaded Google tag will apply every setting configured for that tag.

How does it work?

The process will follow this logic:

- When a Google Ads or Floodlight event fires, GTM checks whether the corresponding Google tag is already loaded.

- If it is, the event is sent as usual.

- If not, GTM automatically loads the missing Google tag with its full configuration before sending the event.
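To make this decision logic concrete, here's a minimal JavaScript model of it. It's a sketch, not GTM's actual code: `createTagDispatcher`, `loadGoogleTag` and `sendEvent` are hypothetical stand-ins for what happens behind the scenes, and the AW- ID is a placeholder.

```javascript
// Hypothetical model of the logic described above, NOT GTM's internal code.
// loadGoogleTag and sendEvent stand in for what GTM does behind the scenes.
function createTagDispatcher(loadGoogleTag, sendEvent, alreadyLoaded = []) {
  // Google tag IDs already present on the page (e.g. added in the container)
  const loadedTags = new Set(alreadyLoaded);

  return function dispatch(event) {
    if (!loadedTags.has(event.tagId)) {
      // Missing Google tag: load it first, with its full configuration
      loadGoogleTag(event.tagId);
      loadedTags.add(event.tagId);
    }
    // Tag available (already there or just loaded): send the event as usual
    sendEvent(event);
  };
}

// Usage: two Google Ads conversions relying on the same placeholder tag ID
const log = [];
const dispatch = createTagDispatcher(
  (tagId) => log.push(`load ${tagId}`),
  (event) => log.push(`send ${event.name}`)
);
dispatch({ tagId: "AW-123456789", name: "purchase" });
dispatch({ tagId: "AW-123456789", name: "sign_up" });
// log now holds: "load AW-123456789", "send purchase", "send sign_up"
```

The tag is loaded once, then reused for every later event. Passing the IDs of Google tags you already deploy in the container as `alreadyLoaded` models the recommended setup: no automatic loading is needed at all.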

What are the advantages of this modification?

- More reliable Google Ads tracking

- Easier activation of features (enhanced conversions, cross-domain tracking, automatic events)

- Optimized data collection: if you have accepted user-provided data (UPD) collection, it will be activated automatically.

What to do?

We recommend that you add the Google tag to your GTM container now, so you can assess the impact of this change before it takes effect automatically on April 10.

If you wish to disable the collection of user-provided data (UPD), you can still do so in the Google tag settings or in the Google Ads/Floodlight product settings.

As always, please don't hesitate to contact us if you have any questions about this update.

ℹ️ For more information, visit https://www.simoahava.com/analytics/clarification-on-google-tag-manager-google-tag-update/

👉 Collecting more data is good, collecting quality data is better. If you don't have a Google tag yet, add one. If you do, make sure it fires before your media tags, and stay in control of the data you share with third parties and your CRM. The quality of your data is your competitive advantage.

👉 Google Analytics 4: transfers of preconfigured reports to BigQuery, now in preview

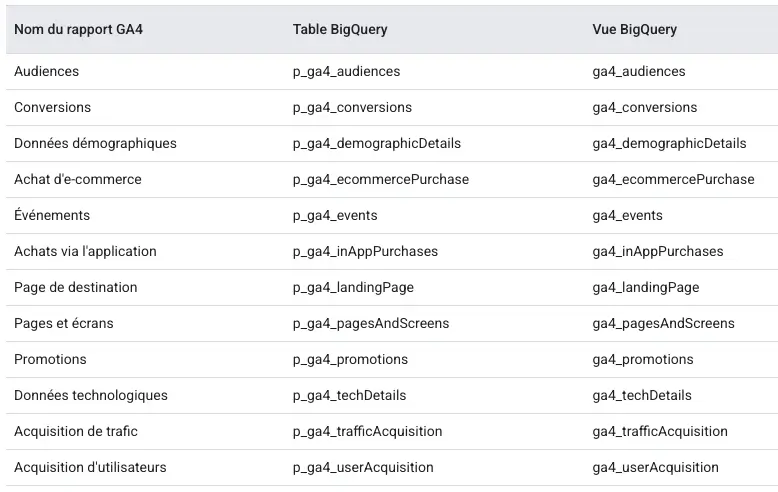

BigQuery now offers automatic transfers of the following preconfigured GA4 reports:

Audiences, Events, User Acquisition, Ecommerce purchases, Demographic details, In-app purchases, Landing pages, Pages and Screens, Tech details, Promotions, Traffic Acquisition, Conversions.

By default, transfers run daily at the time they were created, but you can schedule a specific time.

The refresh interval defaults to the 4 most recent days and can be extended up to 30 days.

ℹ️ Refresh interval: this is simply the number of days of data that BigQuery will retrieve during each transfer. Here's how it works:

- If you set a 4-day interval, each transfer retrieves data for the current day AND the previous 3 days.

- On the first transfer, all data for the last 4 days is retrieved.

- On subsequent transfers, BigQuery loads the latest data and automatically refreshes every day included in the interval.

For older data (outside the refresh interval), you'll need to run a manual backfill.

How does it work?

The principle of these new transfers remains the same:
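The refresh interval arithmetic fits in a few lines of JavaScript: which dates does one run retrieve, and which dates still need a backfill? The function names are hypothetical, and dates are handled as UTC `YYYY-MM-DD` strings, a simplifying assumption (the property's reporting time zone may shift day boundaries).

```javascript
// Shift an ISO date ("YYYY-MM-DD", treated as UTC) by `delta` days
function addDays(isoDate, delta) {
  const d = new Date(`${isoDate}T00:00:00Z`);
  d.setUTCDate(d.getUTCDate() + delta);
  return d.toISOString().slice(0, 10);
}

// Dates refreshed by a run on `runDate`: the run day plus the previous
// (days - 1) days. Default 4, maximum 30 as described above.
function refreshedDates(runDate, days = 4) {
  if (!Number.isInteger(days) || days < 1 || days > 30) {
    throw new RangeError("refresh interval must be between 1 and 30 days");
  }
  return Array.from({ length: days }, (_, i) => addDays(runDate, -i)).reverse();
}

// Dates you need (from `neededFrom` to `runDate`) that the run will NOT
// refresh: these are the candidates for a manual backfill.
function datesNeedingBackfill(runDate, neededFrom, days = 4) {
  const covered = new Set(refreshedDates(runDate, days));
  const missing = [];
  for (let d = neededFrom; d <= runDate; d = addDays(d, 1)) {
    if (!covered.has(d)) missing.push(d);
  }
  return missing;
}

console.log(refreshedDates("2025-04-10"));
// 2025-04-07, 2025-04-08, 2025-04-09, 2025-04-10
console.log(datesNeedingBackfill("2025-04-10", "2025-04-01"));
// 2025-04-01 to 2025-04-06
```

Raising the interval to 30 days shrinks the backfill list, at the cost of re-reading more data on every run.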

- GA4 data are stored in BigQuery tables partitioned by date.

- Each transfer automatically writes to the right partition (the section of the table for that date).

- If you run several transfers on the same day, don't worry! The system simply replaces the old data with the most recent.

- No risk of duplication, even if you run multiple transfers or backfills.

- The other dates remain intact and well organized.
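Here's a toy JavaScript model of this "replace the partition" behaviour. It's not the BigQuery API: `PartitionedTable` and `writePartition` are hypothetical. In BigQuery itself, a comparable result comes from overwriting a single partition (for instance a WRITE_TRUNCATE write to a partition decorator such as `table$20250410`); we're assuming, not asserting, that the transfer service works along those lines.

```javascript
// Toy model of a date-partitioned table with "replace the partition" writes
class PartitionedTable {
  constructor() {
    this.partitions = new Map(); // "YYYY-MM-DD" -> array of rows
  }

  // The partition is replaced, never appended to: re-running a transfer
  // or a backfill for the same date cannot create duplicate rows.
  writePartition(date, rows) {
    this.partitions.set(date, [...rows]);
  }

  rowCount() {
    let total = 0;
    for (const rows of this.partitions.values()) total += rows.length;
    return total;
  }
}

const table = new PartitionedTable();
table.writePartition("2025-04-09", [{ event: "page_view", count: 120 }]);
table.writePartition("2025-04-10", [{ event: "page_view", count: 80 }]);
// Second run on the same day: 2025-04-10 is refreshed with newer figures
table.writePartition("2025-04-10", [{ event: "page_view", count: 95 }]);

console.log(table.rowCount()); // 2 (no duplicated row for 2025-04-10)
console.log(table.partitions.get("2025-04-10")[0].count); // 95
console.log(table.partitions.get("2025-04-09")[0].count); // 120
```

Because each write is scoped to one date, it is idempotent: running it twice leaves the table in the same state as running it once, and other dates are untouched.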

How do I activate the transfer?

- Go to BigQuery Studio → Data Transfers → Create Transfer → Choose Google Analytics 4.

- Select your project, dataset and update frequency.

⚠️ To be notified when a transfer fails:

- Turn on email notifications: the transfer administrator then receives an email whenever a transfer run fails.

- Turn on Pub/Sub notifications: in the Select Cloud Pub/Sub topic field, choose your topic or click Create topic. This configures Pub/Sub run notifications for your transfer.
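If you choose Pub/Sub, the notifications can be consumed by a small function, for example a service receiving push messages. The sketch below assumes the standard Pub/Sub push envelope (`message.data` base64-encoded) and a JSON transfer run payload with `name`, `state` and `errorStatus` fields; check these field names against the BigQuery Data Transfer Service documentation before relying on them. `parseTransferNotification` and all sample values are hypothetical.

```javascript
// Sketch of a handler for Pub/Sub push notifications about transfer runs.
// ASSUMPTIONS: standard push envelope ({ message: { data: <base64> } }) and a
// JSON payload describing the run with name, state and errorStatus fields.
function parseTransferNotification(pushBody) {
  const json = Buffer.from(pushBody.message.data, "base64").toString("utf8");
  const run = JSON.parse(json);
  return {
    runName: run.name,
    state: run.state,
    failed: run.state === "FAILED",
    errorMessage: run.errorStatus?.message ?? null,
  };
}

// Usage with a fake failed run (all values are made up for the example)
const fakeRun = {
  name: "projects/my-project/locations/eu/transferConfigs/abc/runs/xyz",
  state: "FAILED",
  errorStatus: { code: 7, message: "Permission denied on dataset" },
};
const pushBody = {
  message: { data: Buffer.from(JSON.stringify(fakeRun)).toString("base64") },
};
const result = parseTransferNotification(pushBody);
if (result.failed) {
  // Replace with your own alerting (Slack, ticketing tool, etc.)
  console.error(`Transfer run failed: ${result.errorMessage}`);
}
```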

What does it look like?

Once transferred to BigQuery, your Google Analytics 4 reports are converted into BigQuery tables and views, as illustrated below.

💡 We believe that BigQuery is a major asset for analyzing user behavior with GA4 data. With these new transfers, our priority is to check data consistency between BigQuery and the GA4 interface, while keeping the level of detail needed to create value.

👉 Annotations are finally available on GA4

It's a feature we've all been waiting for since the migration to GA4, and it's finally here.

Annotations can now be used to:

- Document important events (campaign launches, site updates, etc.).

- Explain unusual variations in your data

- Share key observations with your team

- Contextualize your reports for better analysis

Two simple methods for creating annotations

- Directly in the interface: right-click on any graph in your GA4 reports and select the annotation option

- Via the Admin API: for advanced users who want to automate annotation creation

ℹ️ To create and edit annotations, you need the Editor role (or higher) at property level. To view them, you need the Viewer role (or higher). Each property is limited to 1,000 annotations, so prioritize what you annotate.
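For the API route, here's a sketch that only builds the request for one annotation and enforces the 1,000-annotation limit. It assumes the Admin API v1alpha `reportingDataAnnotations` resource with `title`, `description`, `color` and `annotationDate` fields, which you should double-check in the current API reference. Authentication is left out, and `buildAnnotationRequest`, the property ID and the campaign are hypothetical.

```javascript
// Builds (without sending) a request creating one GA4 annotation.
// ASSUMPTION: endpoint and field names follow the Admin API v1alpha
// properties.reportingDataAnnotations resource; verify before use.
// Authentication (OAuth token for an Editor-level user) is not shown.
const MAX_ANNOTATIONS_PER_PROPERTY = 1000; // per-property limit quoted above

function buildAnnotationRequest(propertyId, annotation, existingCount) {
  if (existingCount >= MAX_ANNOTATIONS_PER_PROPERTY) {
    throw new Error(`Property ${propertyId} already has ${existingCount} annotations`);
  }
  // "YYYY-MM-DD" -> { year, month, day }
  const [year, month, day] = annotation.date.split("-").map(Number);
  return {
    method: "POST",
    url: `https://analyticsadmin.googleapis.com/v1alpha/properties/${propertyId}/reportingDataAnnotations`,
    body: {
      title: annotation.title,
      description: annotation.description,
      color: annotation.color ?? "BLUE", // assumed enum value
      annotationDate: { year, month, day },
    },
  };
}

// Usage: hypothetical property ID and campaign
const request = buildAnnotationRequest(
  "123456789",
  { title: "Spring campaign launch", description: "Google Ads + Meta", date: "2025-04-10" },
  12
);
console.log(request.method, request.url);
console.log(JSON.stringify(request.body));
```

Keeping request building separate from sending makes it easy to review a batch of annotations (campaign calendar, release log) before pushing them.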

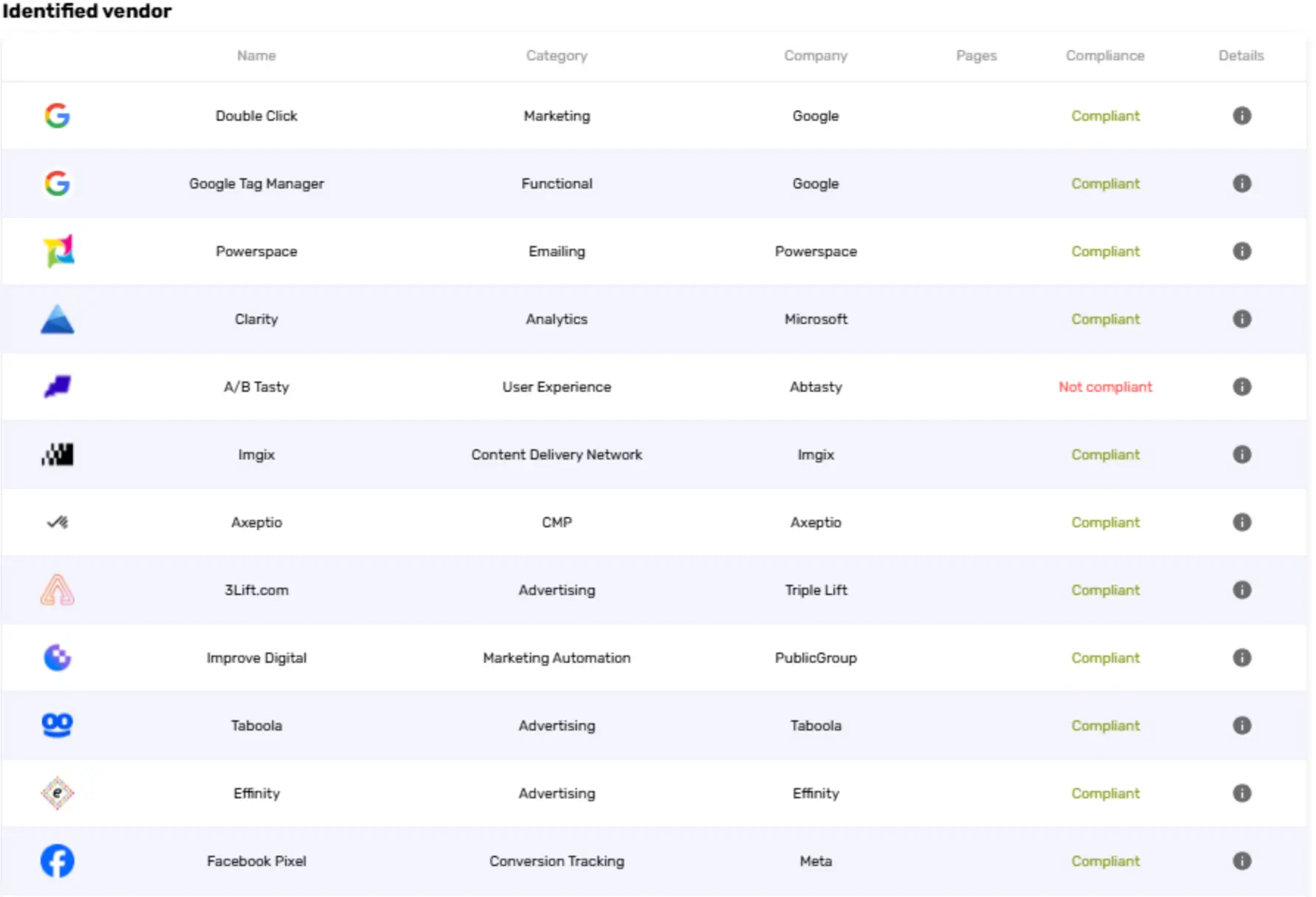

👉 Shake: Axeptio's new tool for checking GDPR compliance

Axeptio has launched Shake, a free cookie analysis tool. In just a few steps, it enables you to assess your website's compliance. Simply enter your site's URL in the interface, and the scanner automatically analyzes all cookies present, classifying them by data collection purpose. You'll then receive a full compliance report by email, along with a customized cookie banner preview.

We've done the test for you, and here's what the generated report looks like:

👉 At Starfox Analytics, we believe this kind of tool is a practical way to quickly get an overview of the cookies used on a site. For companies with limited resources, it's an affordable first step towards compliance. However, we see several limitations. Automatic scanners often miss cookies, especially those set dynamically via JavaScript or after a user interaction: a single scan can easily miss 20-30% of the cookies actually set, or classify some of them incorrectly. Finally, an automatically generated report is no substitute for a proper consent management and data protection strategy.

💡 Tip of the month

Our "Tip of the month" section shares practical tips used daily at Starfox Analytics. These tips cover various Web Analytics tools to optimize your work. Don't hesitate to try them out and share them with your colleagues.

The must-have JavaScript hack for every analytics pro

Here's a JavaScript one-liner used by our tech lead Daniel that will transform the way you work.

To audit GA4 configurations, the best-known manual methods are tedious:

- Digging into Chrome's DevTools

- Navigating through countless tabs

- Searching for GA4 identifiers one by one

- Running the risk of missing hidden configurations or tracking

But there's a much smarter approach.

This simple line of code instantly lists every GA4 measurement ID (the IDs starting with G-) loaded on any website you open:

```javascript
Object.keys(window.google_tag_manager || {}).filter((key) => key.startsWith('G-'))
```

This little snippet lets you:

- Discover all GA4 properties immediately

- Detect phantom implementations

- Validate configurations in different environments

- Prevent data contamination between properties
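To go one step further, the same idea can be wrapped in small functions that are easier to reuse and test, for example to flag GA4 IDs you didn't expect on a page. `listGa4Ids`, `findUnexpectedGa4Ids` and the mock object are hypothetical; in the browser, you'd pass `window.google_tag_manager`.

```javascript
// Reusable version of the one-liner. `gtm` is window.google_tag_manager in a
// browser; here it's a hypothetical mock so the code runs anywhere.
function listGa4Ids(gtm) {
  return Object.keys(gtm || {})
    .filter((key) => key.startsWith("G-"))
    .sort();
}

// GA4 IDs found on the page that are not in your list of expected IDs:
// typical candidates for "phantom" implementations.
function findUnexpectedGa4Ids(gtm, expectedIds) {
  const expected = new Set(expectedIds);
  return listGa4Ids(gtm).filter((id) => !expected.has(id));
}

// Hypothetical page loading a GTM container, a Google Ads tag and two GA4 tags
const mockGtm = { "GTM-ABC123": {}, "AW-123456789": {}, "G-PROD0001": {}, "G-OLD00001": {} };
console.log(listGa4Ids(mockGtm)); // [ 'G-OLD00001', 'G-PROD0001' ]
console.log(findUnexpectedGa4Ids(mockGtm, ["G-PROD0001"])); // [ 'G-OLD00001' ]
```

Sorting the output makes it easy to compare environments (staging vs production) side by side.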

📖 Sharing Is Caring

Every month, our "Sharing is Caring" column presents an in-depth article on a current topic in Web Analytics. Our experts use their know-how and online resources to explore these topics in detail.

This month, discover Oussama's article explaining the subtleties of data management after the implementation of Consent Mode V2.

❤️ Best resources and articles of the moment

- dbdiagram: A site for creating diagrams of your databases

- A Looker Studio dashboard template to showcase product performance in Google Ads and Merchant Center.

- A list of useful Chrome commands for understanding and debugging SEO and web performance.

- Prepare for the Analytics Engineer interview with this article.

🤩 Inside Starfox

🐞 Data Bugs

At Starfox, our obsession with Lean is reflected in every detail of our organization. Each bug is not just corrected: it is documented, analyzed and transformed into a source of collective learning. This is the purpose of our bug data log, a structuring tool that centralizes detected anomalies, their business severity, resolution times, root causes and the environments concerned.

This rigorous monitoring enables us to calculate a "power" score for each bug, but above all to capitalize on our errors to accelerate detection, reduce lead time and avoid production incidents.

👉 Of course, it's always better to detect an anomaly during the internal acceptance phase rather than in post-production, when it's reported by the customer.

📍 Example #1 - GA4: Falling user numbers

A default value user_id = "no_value" in GTM grouped all logged-out users under a single ID in GA4, resulting in a significant underestimation of unique users.

✅ Immediate removal of fallback + implementation of Trooper (our in-house monitoring tool) to prevent drift on deduplication keys.

⏱️ Detected in production by the customer. Lead time between detection and resolution: 4 hours. Total time between bug appearance and resolution: 216 hours (9 days).
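A quick way to see why a constant fallback is so damaging: the JavaScript sketch below counts users on a few hypothetical hits, with and without the "no_value" default. The identity logic (user_id when present, otherwise the client ID) is a deliberate simplification of how GA4 identifies users.

```javascript
// Hypothetical hits: one logged-in visitor and three logged-out visitors
const hits = [
  { user_id: "u-001", client_id: "c-1" },
  { user_id: null, client_id: "c-2" },
  { user_id: null, client_id: "c-3" },
  { user_id: null, client_id: "c-4" },
];

// Buggy variable: always returns a value, even when nobody is logged in
const withFallback = (hit) => hit.user_id ?? "no_value";
// Fixed variable: no default, so no user_id is sent for logged-out visitors
const withoutFallback = (hit) => hit.user_id;

function countUsers(hits, getUserId) {
  // Simplified identity: user_id when present, otherwise the client ID
  const ids = hits.map((hit) => getUserId(hit) ?? hit.client_id);
  return new Set(ids).size;
}

console.log(countUsers(hits, withFallback)); // 2 (u-001 + "no_value")
console.log(countUsers(hits, withoutFallback)); // 4
```

The same reasoning applies to any deduplication key: a placeholder string is a real value, so it merges everything that shares it.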

📍 Example #2 - JS error loop: Addingwell cost overrun

A tag fired an error_event in a loop, generating 2.7M GA4 hits in just a few hours.

✅ Tag disabled + server-side JS error exclusion.

⏱️ Detected in production by the customer. Lead time between detection and resolution: 2 hours. Total time between bug appearance and resolution: 6 hours.
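Besides the server-side exclusion, a client-side safeguard can stop this kind of loop at the source. The sketch below is hypothetical (it's not the fix we deployed): it caps error events per page and ignores repeats of the same message before pushing to a dataLayer-like function. `createErrorReporter`, the `maxPerPage` option and the `error_message` key are illustrative names.

```javascript
// Hypothetical client-side guard against error_event loops: at most
// `maxPerPage` error events per page view, and each message only once.
// `push` stands in for window.dataLayer.push.
function createErrorReporter(push, { maxPerPage = 10 } = {}) {
  const seenMessages = new Set();
  let sentCount = 0;

  return function report(message) {
    if (sentCount >= maxPerPage || seenMessages.has(message)) {
      return false; // dropped: cap reached or duplicate message
    }
    seenMessages.add(message);
    sentCount += 1;
    push({ event: "error_event", error_message: message });
    return true;
  };
}

// In a browser, it could be wired like this:
// const report = createErrorReporter((obj) => window.dataLayer.push(obj));
// window.addEventListener("error", (e) => report(e.message));

// Simulation: the same error thrown 1,000 times, then a different one
const pushed = [];
const report = createErrorReporter((obj) => pushed.push(obj), { maxPerPage: 3 });
for (let i = 0; i < 1000; i++) report("TypeError: x is undefined");
report("ReferenceError: y is not defined");
console.log(pushed.length); // 2
```

The per-page cap bounds the worst case even when every error message is different, while the deduplication handles the classic "same error in a loop" scenario.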

📍 Example #3 - GA4 vs Looker Studio: Inconsistent metrics

Deviations came from the use of user_id (GA4) vs. user_pseudo_id (BigQuery), creating confusion when counting users.

✅ Query corrected with COALESCE(user_id, user_pseudo_id) + integration into our standard templates.

⏱️ Detected in pre-production by our in-house teams. Lead time between detection and resolution: 3 hours. Total time between bug appearance and resolution: 8 hours.
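Here's the same logic as the COALESCE(user_id, user_pseudo_id) fix, in JavaScript on hypothetical BigQuery export rows. The `??` operator mirrors COALESCE: it falls back to the second value only when the first is null or undefined, and null values are left out of the distinct count, as COUNT(DISTINCT ...) does in SQL.

```javascript
// Hypothetical export rows: one logged-in customer on two devices,
// plus one anonymous visitor with two events
const rows = [
  { user_id: "crm-42", user_pseudo_id: "111.aaa" },
  { user_id: "crm-42", user_pseudo_id: "222.bbb" },
  { user_id: null, user_pseudo_id: "333.ccc" },
  { user_id: null, user_pseudo_id: "333.ccc" },
];

// Distinct non-null values, like COUNT(DISTINCT x) in SQL
const distinct = (values) => new Set(values.filter((v) => v != null)).size;

const byPseudoId = distinct(rows.map((r) => r.user_pseudo_id)); // 3
const byUserId = distinct(rows.map((r) => r.user_id)); // 1
const coalesced = distinct(rows.map((r) => r.user_id ?? r.user_pseudo_id)); // 2
console.log({ byPseudoId, byUserId, coalesced });
```

One caveat of this per-row approach: events sent before login only carry user_pseudo_id, so the same person can still be counted twice. It's a pragmatic trade-off, not an exact replica of GA4's reporting identity.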

A need, a question?

Write to us at hello@starfox-analytics.com.

Our team will get back to you as soon as possible.
