👋 In this article, you'll discover the concept of vector search, explained simply, along with its main use cases.

Language is often ambiguous and imprecise. Two different words can share the same meaning (synonymy), and a single word can have several meanings (polysemy). In French, for example, "lourd" and "pesant" can have similar meanings, but "lourd" can also mean many different things: heavy, tiring, painful, hard to bear, or even coarse. This inherent complexity and ambiguity of natural language poses a major challenge for computer systems that have to process and understand text.

This is where vector search comes in. This machine learning technique is increasingly used in a wide range of applications, including search engines, content recommendation and text analysis, because it captures the semantic nuances and multiple meanings of words in a form that computers can process efficiently.

But what exactly are we talking about?

What is vector search?

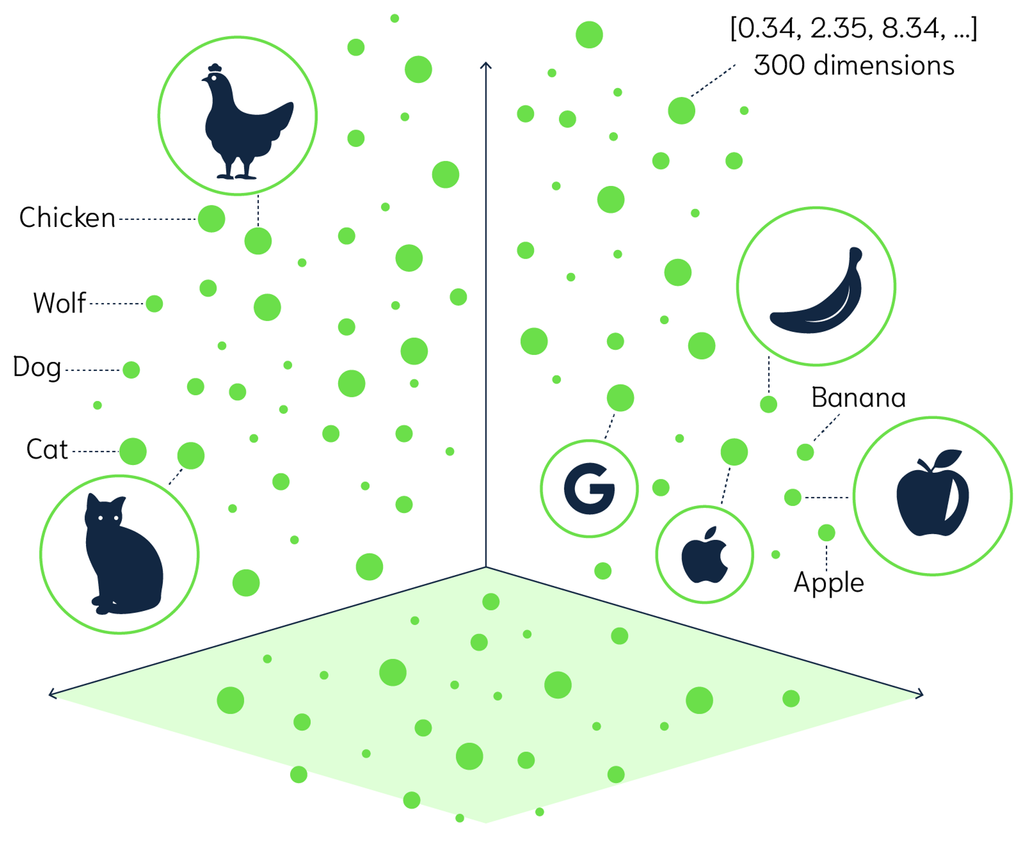

Vector search is a technique that transforms words, phrases or documents into numerical representations called vectors. These vectors capture the essential features of the text in a way that computers can process efficiently.

The vector search process:

- Creation of embeddings: Text data is transformed into embeddings, numerical representations that capture meaning and context.

- Vector generation: These embeddings are then expressed as vectors, which are lists of numbers.

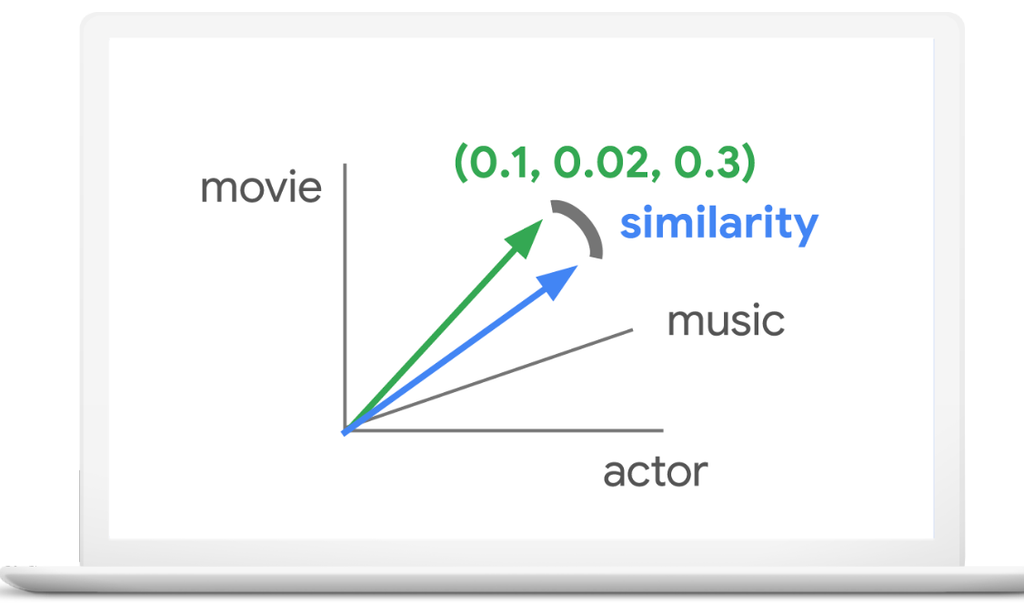

- Comparison: Vectors can be mathematically compared to assess the similarity between the texts they represent.
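The three steps above can be sketched in a few lines of Python. This is a minimal illustration under a loud assumption: a real system would call a trained embedding model, whereas here a hypothetical `embed` function simply counts words over a tiny, made-up vocabulary so the example runs with no dependencies. The comparison step uses cosine similarity.

```python
import math

# Hypothetical toy vocabulary; a real embedding model learns its own dimensions.
VOCAB = ["cat", "dog", "pet", "car", "engine", "road"]

def embed(text: str) -> list[float]:
    """Steps 1-2: turn text into a vector (a list of numbers).

    Stand-in for a learned embedding model: each dimension counts how often
    one vocabulary word appears in the text.
    """
    words = text.lower().split()
    return [float(words.count(term)) for term in VOCAB]

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Step 3: compare two vectors; 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an all-zero vector has no direction to compare
    return dot / (norm_a * norm_b)

query = embed("my cat is a good pet")
doc_pets = embed("a dog is a loyal pet")
doc_cars = embed("the car engine roared down the road")

print(cosine_similarity(query, doc_pets))  # shares "pet": positive score
print(cosine_similarity(query, doc_cars))  # no shared vocabulary words: 0.0
```

This word-count stand-in would treat "heavy" and "weighty" as unrelated; recognizing synonyms like these is precisely what learned embeddings add.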

From words to vectors: the magic of embeddings

Embeddings are the heart of vector search. They are dense numerical representations that capture the meaning and context of words or phrases. But how do you get from text to these famous vectors? That's where embedding techniques come in.

Here's an overview of the main methods:

1. Word2Vec: This pioneering technique analyzes word co-occurrences to create vectors that capture semantic relationships.

2. BERT (Bidirectional Encoder Representations from Transformers): Developed by Google, BERT takes bidirectional context into account (the words both before and after each word) to generate richer embeddings.

3. GPT (Generative Pre-trained Transformer): This family of models, which includes the well-known GPT-3, excels at text generation and can also produce contextual embeddings.

4. Vertex AI: Google makes several models available through this platform to generate embeddings in multiple languages.
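To make the idea behind Word2Vec more concrete, the sketch below counts which words appear near each other in a small, made-up corpus. It is an illustration of the co-occurrence signal only, not Word2Vec itself: Word2Vec learns compact, dense vectors by training a shallow neural network to predict words from their neighbors (or neighbors from a word). The corpus, the `WINDOW` size and the helper names are hypothetical.

```python
import math
from collections import Counter, defaultdict

# Hypothetical toy corpus: "cat" and "dog" appear in similar contexts.
corpus = [
    "the cat drinks milk",
    "the dog drinks water",
    "the cat chases the ball",
    "the dog chases the ball",
    "the car needs fuel",
]
WINDOW = 2  # neighbors counted on each side of a word

def cooccurrence_vectors(sentences, window):
    """Map each word to a Counter of the words seen within `window` positions."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    counts[word][tokens[j]] += 1
    return counts

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

vectors = cooccurrence_vectors(corpus, WINDOW)
print(cosine(vectors["cat"], vectors["dog"]))  # many shared contexts: high
print(cosine(vectors["cat"], vectors["car"]))  # fewer shared contexts: lower
```

Even this crude count scores "cat" closer to "dog" than to "car", because the two animals share neighbors such as "drinks" and "chases".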

Comparison measures:

Cosine similarity: This is the most commonly used similarity measure. It calculates the cosine of the angle between two vectors. The more closely the vectors are aligned (the smaller the angle), the greater their similarity.
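Written out for two vectors a and b with n components, cosine similarity divides their dot product by the product of their lengths. The result ranges from -1 (opposite directions) to 1 (same direction). The worked example uses two illustrative vectors separated by a 45° angle:

```latex
\cos(\theta) = \frac{\mathbf{a} \cdot \mathbf{b}}{\lVert \mathbf{a} \rVert \, \lVert \mathbf{b} \rVert}
= \frac{\sum_{i=1}^{n} a_i b_i}{\sqrt{\sum_{i=1}^{n} a_i^2}\,\sqrt{\sum_{i=1}^{n} b_i^2}}

\text{Example: } \mathbf{a} = (1, 0),\ \mathbf{b} = (1, 1):\quad
\cos(\theta) = \frac{1 \cdot 1 + 0 \cdot 1}{\sqrt{1}\,\sqrt{2}} = \frac{1}{\sqrt{2}} \approx 0.707
```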

Euclidean distance: This is the straight-line distance between two vectors in multidimensional space. The smaller the distance, the greater their similarity.
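Euclidean distance is the square root of the summed squared differences between components. The short sketch below (illustrative vectors, standard library only) computes it alongside cosine similarity, showing that the two measures can disagree: a scaled copy of a vector is a perfect match by cosine, yet farther away by Euclidean distance than a vector pointing in another direction.

```python
import math

def euclidean_distance(a: list[float], b: list[float]) -> float:
    """Straight-line distance sqrt(sum((a_i - b_i)^2)). Smaller = more similar."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between a and b. Larger = more similar."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

a = [1.0, 2.0]
b = [2.0, 4.0]  # same direction as a, twice as long
c = [2.0, 1.0]  # different direction, same length as a

print(euclidean_distance(a, b), cosine_similarity(a, b))  # ~2.236, ~1.0
print(euclidean_distance(a, c), cosine_similarity(a, c))  # ~1.414, ~0.8
```

Which measure suits a given application depends on the embedding model. When vectors are normalized to unit length, the two measures rank results in the same order.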

Practical applications: vector search in everyday life

Vector search has many practical applications:

- Search engines: Leading search engines such as Google and Bing use vector search to perform semantic searches, which helps them better understand user queries and return more relevant results.

- Recommendation systems: Netflix, Amazon and others use vector search to find products, films or articles similar to those we've already viewed or enjoyed. Their algorithms analyze content vectors to identify the items that best match our tastes.

- Conversational assistants: Chatbots and virtual assistants like Alexa or Siri don't understand language the way humans do, but they can use vector search to grasp the meaning of the phrases and questions users ask. They compare the vector of a query with the vectors of possible answers in their knowledge base to find the most appropriate response.
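The matching step described for assistants (and, with item vectors in place of queries, for recommendations) boils down to a nearest-neighbor search: score every stored vector against the query and keep the best ones. The sketch below does this by brute force over a hypothetical knowledge base whose 3-dimensional vectors are made up for illustration; in practice they would come from an embedding model, and large collections typically use approximate nearest-neighbor indexes instead of scanning every entry.

```python
import math

# Hypothetical knowledge base: answer text -> precomputed embedding (made-up vectors).
KNOWLEDGE_BASE = {
    "Our opening hours are 9am to 6pm.": [0.9, 0.1, 0.0],
    "You can reset your password from the login page.": [0.1, 0.9, 0.1],
    "Shipping usually takes 3 to 5 business days.": [0.0, 0.2, 0.9],
}

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def top_k(query_vector, entries, k=1):
    """Return (score, text) pairs for the k entries most similar to the query."""
    scored = [(cosine_similarity(query_vector, vec), text) for text, vec in entries.items()]
    scored.sort(reverse=True)  # highest similarity first
    return scored[:k]

# Hypothetical embedding of a question such as "How do I change my password?"
query = [0.2, 0.8, 0.0]
for score, answer in top_k(query, KNOWLEDGE_BASE, k=2):
    print(f"{score:.3f}  {answer}")
```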

ℹ️ Modern search engines such as Google Search, Bing, Yahoo and DuckDuckGo rely on vector search techniques, alongside other ranking signals, to provide users with the most relevant results possible.

Have a need or a question?

Write to us at hello@starfox-analytics.com.

Our team will get back to you as soon as possible.
